I am launching a new non-profit AI safety research organization called LawZero, to prioritize safety over commercial imperatives. This organization has been created in response…

Category: AI safety

This paper was initially published by the Aspen Strategy Group (ASG), a policy program of the Aspen Institute. It was released as part of a…

As we move towards more powerful AI, it becomes urgent to better understand the risks, ideally in a mathematically rigorous and quantifiable way, and use…

What can’t we afford with a future superintelligent AI? Among others, confidently wrong predictions about the harm that some actions could yield. Especially catastrophic harm.…

On May 31st, 2023, a BBC web page headlined: “AI ‘godfather’ Yoshua Bengio feels ‘lost’ over life’s work.” However, this is a statement I never…

The capabilities of AI systems have steadily increased with deep learning advances for which I received a Turing Award alongside Geoffrey Hinton (University of Toronto)…

I have been hearing many arguments from different people regarding catastrophic AI risks. I wanted to clarify these arguments, first for myself, because I would…

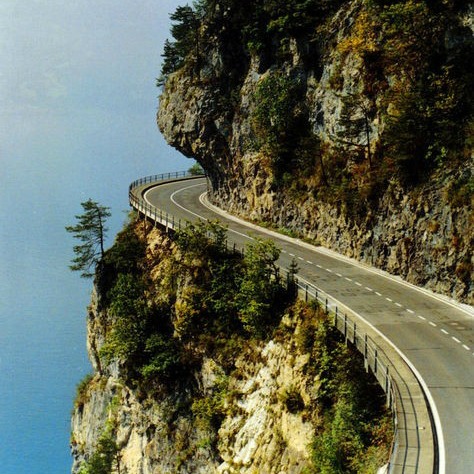

Scenarios giving rise to potentially rogue AIs, from intentional genocidal behavior of some humans to unintentional catastrophe arising because of AI misalignment.

There have recently been lots of discussions about the risks of AI, whether in the short term with existing methods or in the longer term…
